When AI-First Becomes Automation-First

Four Dangers of Confusing Intelligence with Efficiency

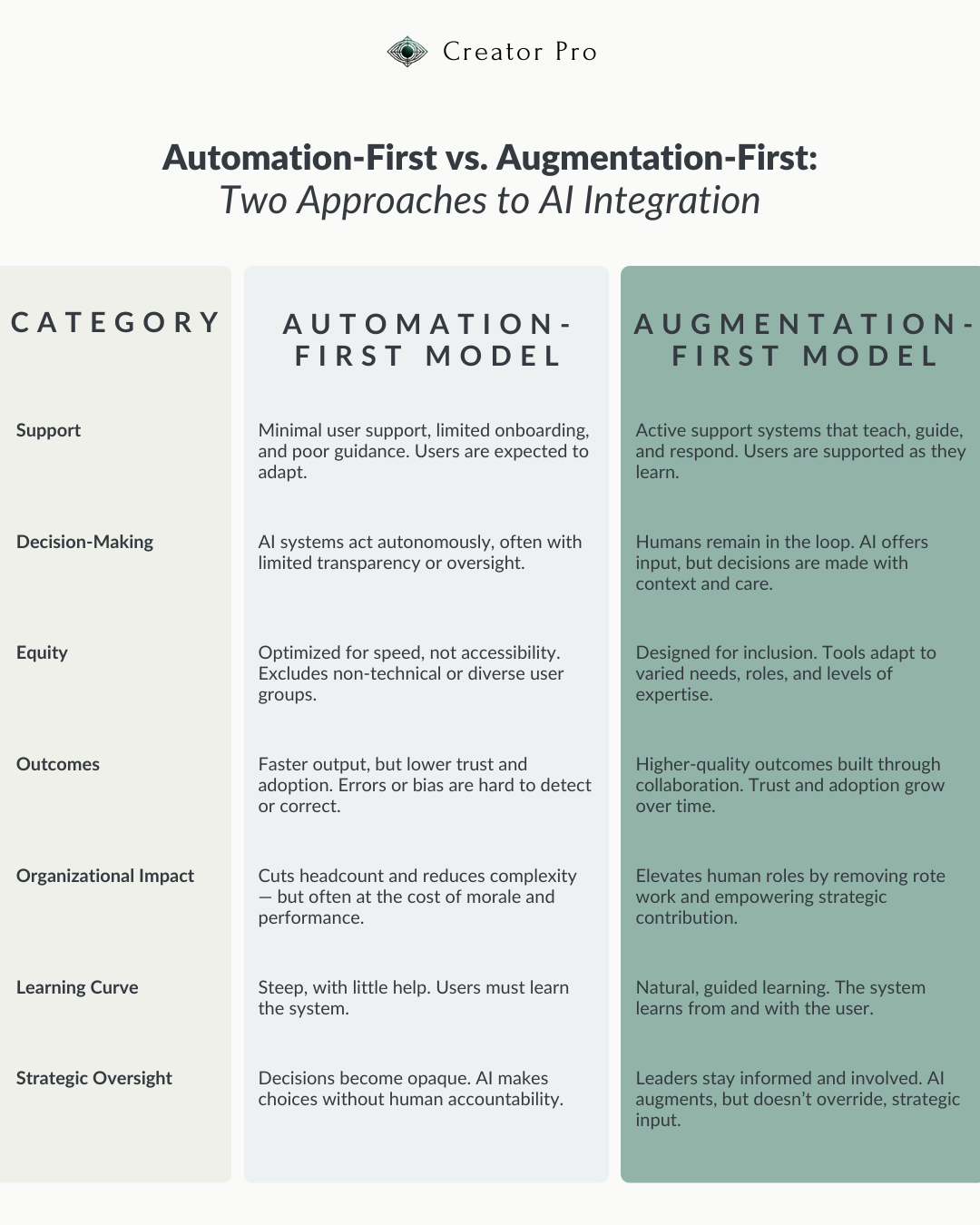

Over the past year, “AI-first” has become a strategic mantra for businesses, institutions, and platforms racing to keep pace with rapid technological change. But somewhere along the way, a dangerous shift has taken hold — one that quietly replaces intelligence with automation, and strategy with speed.

Instead of building AI systems to augment human ability, too many organizations are using AI to eliminate human involvement entirely. The result? A growing body of systems that prioritize scale over sense-making, and efficiency over empathy.

Here are four critical risks that emerge when “AI-first” becomes “automation-first” — and what a more human-centered approach looks like instead.

Danger #1: Humans Are Treated as Inefficiencies

In automation-first models, humans are often viewed as slow, error-prone, and costly. This mindset doesn’t just show up in workflows — it shapes entire organizational decisions, from hiring to product development. The message becomes clear: if it can be automated, it should be.

The problem? Many tasks that appear automatable on the surface — like responding to customers, reviewing data, or designing content — actually require human nuance, empathy, or ethical judgment.

When humans are seen as inefficiencies, we stop investing in their development. We design systems that bypass their input, rather than amplifying their potential. And in doing so, we miss out on creativity, context, and insight — the very things AI can’t replicate.

Danger #2: Customer Support and Training Collapse

Automation-first systems often cut corners exactly where users need the most help: onboarding, customer service, and human connection.

We’ve all experienced it: a chatbot that can’t understand your issue. A help center full of circular articles. A platform that quietly updates features with no explanation, leaving users frustrated and unsupported.

This isn’t just poor UX; it’s structural neglect. In a rush to “streamline,” organizations are removing the very scaffolding that enables trust and retention. Training gets minimized. Feedback loops vanish. And the system becomes harder to use for anyone outside the tech-savvy elite.

In AI-first systems that prioritize humans, support isn’t an afterthought — it’s central to adoption. Teaching becomes part of the product, and users are treated as partners, not problems.

Danger #3: Inequality Gets Reinforced — Not Solved

There’s a popular narrative that AI will democratize access to information, opportunity, and creativity. But automation-first strategies often do the opposite.

When tools are designed without real consideration for neurodiversity, age, education levels, or cultural context, they tend to serve one type of user: the technically proficient, English-speaking professional who already knows how to interact with AI.

Others — including senior leaders, neurodivergent thinkers, and global users — are left out. They find the tools confusing, the expectations unclear, and the support insufficient. And when they don’t adopt the tools quickly, they’re blamed for “falling behind.”

True AI-first systems should narrow gaps — not widen them. But when automation becomes the goal, it often reinforces the very inequalities AI was supposed to help solve.

Danger #4: Strategy and Accountability Fall Through the Cracks

Finally, one of the most overlooked risks of automation-first thinking is the slow erosion of strategic oversight.

When AI starts making decisions — whether about hiring, pricing, targeting, or content moderation — organizations can become disconnected from the reasoning behind those decisions. Leaders delegate too much authority to black-box models without asking critical questions:

- Why did the AI make that choice?

- What values are embedded in the output?

- Who’s accountable if it goes wrong?

Without human judgment and discernment, AI systems can amplify bias, optimize for the wrong goals, and make decisions that clash with an organization’s values. And without strategic oversight, those risks compound over time.

The Better Alternative: Human-AI Partnerships

So what does “AI-first” look like when it’s done right?

It looks like systems that treat AI as a thinking partner — not a replacement. It looks like workflows that speed up repetitive tasks so humans can focus on what matters: creativity, leadership, communication, and innovation.

Some examples of what this looks like in practice:

- A team leader uses AI to draft internal reports, then refines them with organizational insight.

- A solopreneur uses AI to brainstorm new offers, but relies on their intuition and audience data to make final decisions.

- An HR manager uses AI to review patterns in employee feedback, not to hand off their own judgment, but to uncover blind spots and support better outcomes.

These are augmentation models, not automation models. And they don’t just protect jobs — they enhance them.

Bottom Line

Confusing “AI-first” with “automation-first” isn’t just a terminology issue; it’s a directional mistake. It leads to products that underperform, users who disengage, and systems that overlook the very humans they were meant to empower.

For professionals navigating this new landscape, the goal isn’t to resist AI; it’s to shape how it’s integrated. Because if we want an AI-powered future that works for more people, we can’t afford to leave people out of the process.