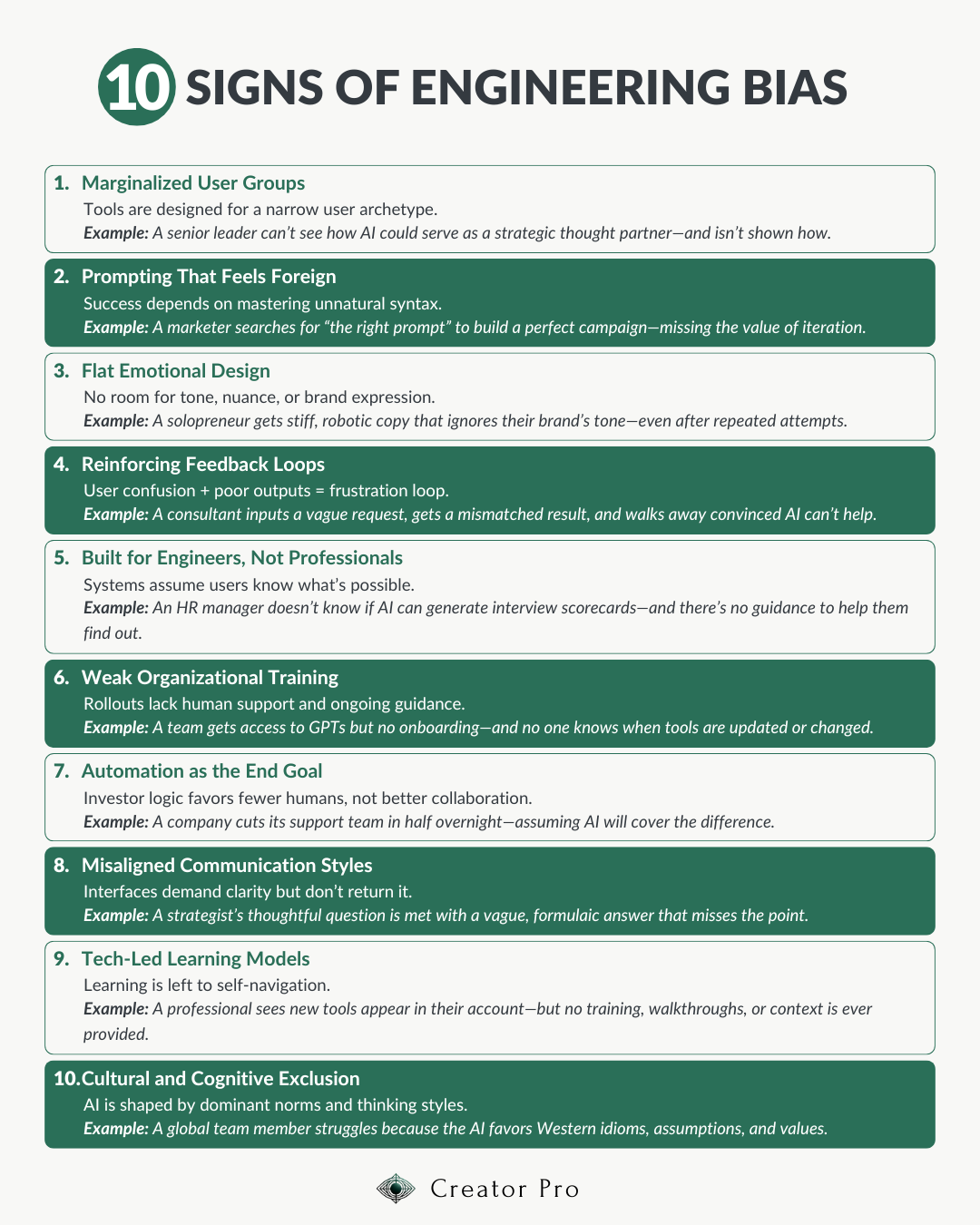

Top 10 Ways Engineering Bias Is Undermining Global AI Adoption

AI is becoming a foundational part of modern professional life. And yet for many professionals, the experience of trying to adopt AI feels frustrating, confusing, and alienating. This is because most AI tools were built with one kind of user in mind: engineers. This deeply embedded engineering bias is silently shaping how AI is built, how it is taught, and who actually benefits. The consequences are starting to show.

This article breaks down 10 of the most damaging impacts of engineering bias in AI design and rollout. If you've been struggling to use AI effectively, this list may help explain why.

1. Marginalized User Groups

Women, neurodivergent thinkers, and senior leaders are being sidelined. Engineering-led design often fails to consider diverse thinking styles, communication preferences, and emotional nuance. As a result, many professionals feel alienated from tools that don’t reflect their cognitive strengths or leadership approaches—despite having decades of valuable experience.

2. Unnatural Prompting Expectations

Success depends on mastering a language that doesn't feel natural. Most AI tools expect users to interact through prompt syntax designed by engineers. But for the majority of people, that structure feels awkward, performative, or even intimidating—turning every interaction into a guessing game.

3. Emotionally Flat Interfaces

AI systems lack emotional intelligence by design. Most AI interfaces aren’t built to respond to tone, empathy, or relational cues. That’s a problem when so many professionals rely on intuition, nuance, and contextual communication in their daily work.

4. Feedback Loops Reinforce Disengagement

Engineering bias creates a destructive feedback loop. Our research uncovered a pattern: users follow the guidance they’re given—often pre-written prompts or rigid templates—but don’t get the output they expect. Because they haven’t been taught what the AI can or can’t do, they’re left to guess. Misaligned expectations and inconsistent results lead to stress and eventual abandonment. The tools aren’t improving because the user experience isn’t being centered.

5. Enterprise Tools Are Gatekept

Most AI systems are only intuitive to engineers. In enterprise environments, adopting AI still depends on technical gatekeepers. Training is sparse, language is technical, and usability is often an afterthought. This creates a divide between those who can operate the tools and those who actually need to benefit from them.

6. Poor Organizational Support

Training approaches are outdated and do not scale. Organizations often roll out AI with a handful of workshops or a video series, then assume adoption will take care of itself. But meaningful integration takes time, iteration, and feedback. Most professionals are being handed a powerful tool with little to no support in using it effectively.

7. Investor Pressure to Automate

Tech culture is racing toward headcount reduction. Rather than focusing on how AI can elevate people’s potential, many AI tools are being built to eliminate the need for people altogether. This mindset fuels anxiety in the workplace and drives adoption strategies that prioritize automation over augmentation.

8. Communication Style Mismatch

Engineered systems don't speak the way most people think. Many AI tools respond best to structured, direct input. But human communication is messy, layered, and contextual. When systems don’t understand the nuance in user intent, users are blamed for not being “clear enough.”

9. Tech-Centric Learning Curves

Most training materials assume technical fluency. Even well-designed tools become frustrating if the learning curve is steep. Tutorials, onboarding flows, and help documents often reflect engineering logic—not how most people learn, process, and apply information.

10. Cultural and Cognitive Exclusion

A narrow worldview is being embedded into global systems. Engineering bias often reflects Western, male-dominated perspectives—and those biases shape everything from interface design to training data. Professionals from diverse cultural and cognitive backgrounds face an uphill battle just to be understood by the system.

Why This Matters

If AI is going to succeed at a global scale, it can’t work for only one type of thinker, promote only one learning style, or reflect only one cultural lens. It has to work for everyone.

For professionals navigating AI adoption right now, it’s easy to feel overwhelmed and discouraged. This article was written to show that the problem is not with you; it’s with a system that was designed without you in mind.

It’s time for a new system.

One that centers human intelligence, supports emotional nuance, adapts to diverse thinking styles, and treats AI as a co-creator for humanity—not its replacement.