The Ubiquity of Prompt Engineering and the Misalignment Problem

Introduction

Over the past five years, prompt engineering has become nearly synonymous with interacting effectively with AI systems. Early large language models (LLMs) such as GPT-2 and GPT-3 required carefully structured prompts to produce reliable outputs, so engineers and early adopters developed, out of necessity, a quasi-formal discipline of prompt engineering akin to coding in natural language. That discipline shaped the AI industry's educational focus between 2020 and 2025.

However, as AI has rapidly evolved, this once-essential skill has become increasingly redundant. Today's advanced systems, including OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini, excel at understanding plain-language instructions, maintaining context over multi-turn dialogues, and even responding intuitively to voice-based interactions. These developments have exposed a significant misalignment: the continued heavy emphasis on prompt engineering no longer matches mainstream user needs, and it creates unnecessary complexity and friction.

“The dominance of prompt engineering creates unnecessary friction, alienates mainstream users, and stalls widespread adoption. Transitioning to conversational AI interactions is crucial to bridge this gap, enabling broader, intuitive, and inclusive AI adoption.”

This misalignment has substantial economic and social implications—particularly limiting productivity and creating barriers for non-technical users, women, senior leaders, and neurodiverse individuals. To achieve broader, inclusive adoption of AI, the industry must move decisively beyond prompt engineering and embrace conversational interaction paradigms.

The sections below detail prompt engineering’s historical dominance, explore its origins, clarify why it has become redundant, explicitly identify the friction it creates, and advocate strongly for conversational AI as the new standard.

The Rise and Ubiquity of Prompt Engineering (2020–2025)

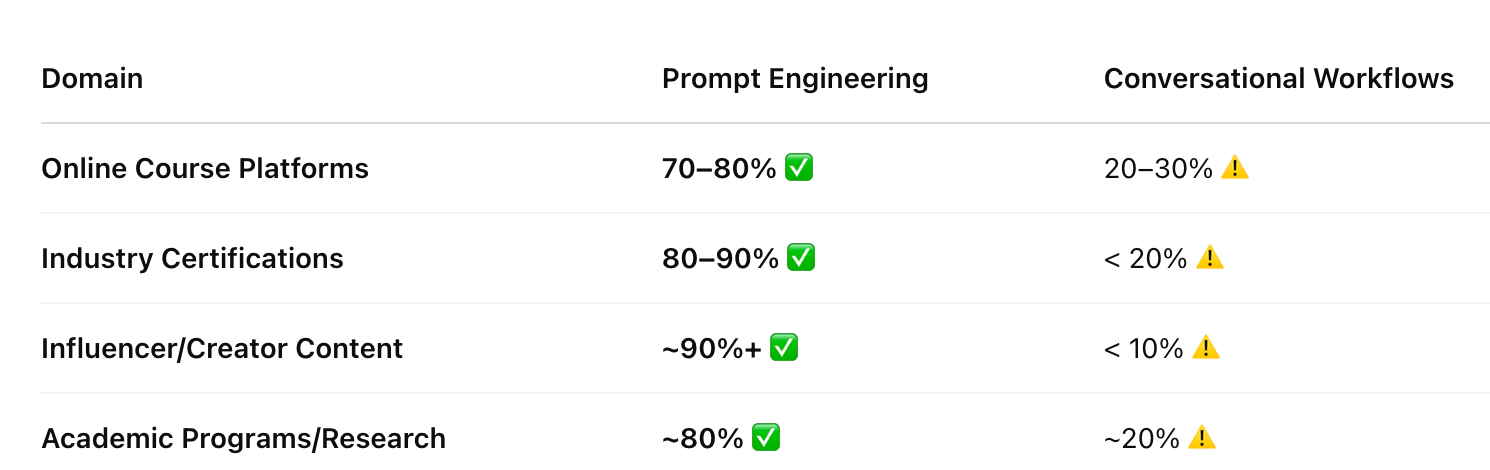

Between 2020 and 2025, prompt engineering emerged as the predominant skill taught to AI practitioners and general users. An estimated 70–90% of beginner-focused generative AI training content concentrated exclusively on prompt crafting techniques, overshadowing more intuitive conversational approaches. This dominance was reflected across major online educational platforms, professional training programs, technical blogs, and industry media:

Online Course Platforms:

Platforms like Coursera, edX, and Udemy featured extensive courses on prompt engineering. For example, Coursera’s Prompt Engineering specialization from Vanderbilt University attracted over 77,000 enrollments (Coursera Prompt Engineering Specialization), while Class Central aggregated nearly 1,500 prompt-engineering courses by 2025 (Class Central Prompt Engineering).

Professional Training and Certifications:

LinkedIn Learning heavily promoted prompt engineering through a dedicated learning path titled “Develop Your Prompt Engineering Skills,” comprising 9 detailed courses. Popular offerings like “Prompt Engineering: How to Talk to the AIs” and “How to Research and Write Using Generative AI Tools” drew over 700,000 and 670,000 viewers respectively (LinkedIn Learning Prompt Engineering). Similarly, IBM offered an Introduction to Prompt Engineering on edX and enrolled over 46,800 students in its Prompt Engineering for Everyone course via Cognitive Class (IBM Prompt Engineering for Everyone).

Technical Blogs and Industry Media:

Industry publications and influential blogs discussed prompt-writing techniques extensively. Medium articles and specialized blogs frequently published guides on creating effective prompts, framing prompt engineering as a critical skill. The World Economic Forum notably observed a “huge interest in prompt engineering” after the launch of GPT-4, positioning it as a vital future skill (World Economic Forum on Prompt Engineering).

YouTube Tutorials and Webinars:

YouTube saw numerous tutorials highlighting prompt engineering, some achieving rapid popularity. For instance, a comprehensive “Prompt Engineering Tutorial – Master ChatGPT and LLM Responses” video amassed over 670,000 views within months (YouTube Prompt Engineering Tutorial).

The prompt engineering phenomenon extended to formal certifications and dedicated job roles. Companies posted positions for “Prompt Engineers” or “AI Prompt Specialists,” with salaries occasionally reaching six figures, underscoring the perceived strategic importance of this skill (WEF on Prompt Engineering Roles).

Despite this heavy educational and professional emphasis, instructional content rarely promoted conversational or intuitive AI use. Instead, new users were taught to think and communicate as prompt engineers, using structured patterns, syntax frameworks, and detailed query templates (e.g., the Role-Instruction-Context method, zero-shot vs. few-shot prompting). This trained users to think in structured prompts rather than conversationally and reinforced the implicit assumption that effective AI interaction requires specialized technical querying skills, a perspective increasingly challenged by advancements in conversational AI. That assumption, in turn, perpetuates the engineering bias.

Origins and Historical Necessity of Prompt Engineering

Prompt engineering became ubiquitous because early generative AI models, notably GPT-2 (2019) and GPT-3 (2020), required meticulously structured prompts to yield reliable outputs. These models were powerful yet brittle, producing drastically different results based on subtle wording changes. Users quickly learned to frame their queries with precision—effectively developing a skill akin to “programming” in natural language (Gwern on GPT-3 prompt brittleness).

The introduction of GPT-3 marked a significant leap forward due to its scale (175 billion parameters) and few-shot learning capability. However, interacting with GPT-3 via API meant users needed to carefully engineer text-based prompts to clearly convey their intentions. Researchers and early adopters humorously referred to this process as "casting spells," highlighting its experimental and somewhat esoteric nature (Google Developers on Prompt Programming).

Prompt engineering quickly formalized as users identified repeatable strategies, such as the Persona Pattern (assigning the AI a specific role), Few-Shot Prompting (providing examples), and later, Chain-of-Thought prompting (explicit step-by-step reasoning). These strategies became codified practices, widely disseminated through courses, blogs, and corporate training (IBM Cognitive Class on Prompt Techniques).
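
To make the three patterns named above concrete, the following is a minimal sketch of how each one shapes the text sent to a model. The helper names and the exact prompt wording are hypothetical, chosen only for illustration; they are not taken from any of the cited courses or from a vendor's documentation, and no model is actually called.

```python
# Illustrative sketch only: helper names and prompt wording are hypothetical,
# not taken from any course or vendor documentation. No model is called.


def persona_prompt(role: str, task: str) -> str:
    """Persona Pattern: assign the model a role before stating the task."""
    return f"You are {role}.\n{task}"


def few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Few-Shot Prompting: show worked input/output pairs, then the new input."""
    shots = "\n".join(f"Input: {q}\nOutput: {a}" for q, a in examples)
    return f"{shots}\nInput: {query}\nOutput:"


def chain_of_thought_prompt(question: str) -> str:
    """Chain-of-Thought: explicitly request step-by-step reasoning."""
    return f"{question}\nLet's think step by step."


if __name__ == "__main__":
    print(persona_prompt("a patient statistics tutor", "Explain what a p-value is."))
    print(few_shot_prompt([("great film", "positive"), ("dull plot", "negative")], "loved it"))
    print(chain_of_thought_prompt("A train travels 120 km in 2 hours. What is its average speed?"))
```

Each helper only assembles a string; the point is that the user, not the system, carries the burden of structuring the request.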

Historically, prompt engineering mirrored earlier technology adoption phases. Just as early web users mastered HTML or Boolean search queries before user-friendly interfaces became prevalent, early AI users had to adapt their behavior to communicate effectively with limited, opaque systems. Another parallel lies in early computing’s command-line interfaces, which required users to learn precise command syntax before graphical user interfaces simplified interaction. Prompt engineering served an analogous role—a necessary bridge to utilizing the nascent capabilities of early AI models effectively (Tim Wappat on Early AI Adoption).

Thus, the emergence of prompt engineering was historically essential, a solution driven by technological constraints. Early adopters readily embraced this complexity as it enabled them to unlock the full potential of generative AI. However, this phase was never intended as the end goal. With modern AI systems now inherently capable of intuitive conversational interactions, prompt engineering is shifting from necessity toward redundancy.

Why Prompt Engineering is Becoming Redundant

The rapid evolution of modern AI systems is diminishing the need for traditional prompt engineering. AI platforms such as OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini have advanced significantly in interpreting natural, conversational language, making rigid prompt structuring increasingly unnecessary.

Conversational Interfaces as Default:

The release of ChatGPT in late 2022 represented a major paradigm shift, embedding GPT-3.5 within an intuitive conversational interface. Unlike users of earlier models, ChatGPT users did not need prompt engineering expertise; they could simply engage naturally. Its phenomenal growth, an estimated 100 million users within two months of launch, underscores a clear preference for accessible, conversation-based interactions over engineered prompts (Reuters on ChatGPT growth).

Improved Instruction-Following and Alignment:

Recent models incorporate advanced alignment techniques such as reinforcement learning from human feedback (RLHF), significantly enhancing their ability to accurately interpret simple, direct requests. For instance, GPT-4 no longer requires complex, explicitly formatted prompts to explain concepts clearly, reducing the user's cognitive load (Google DeepMind on Gemini’s reasoning capabilities).

Multimodal and Voice Interactions:

The introduction of voice-enabled interfaces and multimodal capabilities has further eroded the necessity for structured prompts. For example, ChatGPT’s voice update in late 2023 allows users to interact naturally through spoken language, dramatically simplifying interaction and reducing reliance on prompt construction (Reuters on ChatGPT voice capabilities).

Chain-of-Thought Reasoning Integrated Naturally:

Advanced techniques once reserved for carefully engineered prompts, such as chain-of-thought reasoning, are increasingly automated or initiated simply by asking models to "think step-by-step." This conversational approach allows users to guide AI through complex tasks interactively rather than through meticulously structured initial prompts (IBM on Chain-of-Thought).
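
As a rough illustration of this interactive style, the sketch below builds a conversation turn by turn instead of packing everything into one engineered prompt. The role/content message shape and the helper names are assumptions made for this example, and the assistant reply is a placeholder rather than real model output.

```python
# Hypothetical sketch: the role/content message shape and helper names are
# assumptions for illustration, and the assistant text is a placeholder.

Message = dict[str, str]


def add_turn(history: list[Message], role: str, text: str) -> list[Message]:
    """Return a new history with one more message appended (input is not mutated)."""
    return history + [{"role": role, "content": text}]


# The user starts with a plain request, then steers the reasoning in a follow-up.
conversation: list[Message] = []
conversation = add_turn(conversation, "user", "Help me plan a budget for a team offsite.")
conversation = add_turn(conversation, "assistant", "(model reply would appear here)")
conversation = add_turn(conversation, "user", "Can you think step-by-step about travel costs first?")

if __name__ == "__main__":
    for message in conversation:
        print(f"{message['role']}: {message['content']}")
```

The step-by-step request arrives as an ordinary follow-up message, so the user never has to design the full prompt up front.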

Declining Industry Emphasis on Prompt Engineering:

By 2024, industry narratives had begun to shift, highlighting that prompt engineering was always an interim solution, not a permanent practice. UX designers and AI strategists increasingly advocated for intuitive conversational interactions, reflecting a broader shift toward human-centered AI (UX Collective on the fall of prompt engineering).

Implications for General Users:

For mainstream users, these advancements mean AI is rapidly becoming accessible without specialized training. Natural conversational interactions, voice commands, and intuitive reasoning models allow users to engage AI effortlessly, marking a significant departure from previous demands for prompt engineering proficiency (Towards Dev on conversational AI ease).

Prompt engineering, while still useful in niche professional contexts, is undeniably trending toward obsolescence as conversational and multimodal interactions become standard. This shift signals a deeper realignment in AI toward user-centric design, dramatically simplifying adoption and usability.

These shifts signal a critical inflection point for AI adoption: the future lies unequivocally in conversational and multimodal interaction methods. Continuing to prioritize complex prompt engineering techniques over intuitive user interactions will increasingly limit mainstream adoption and accessibility.

The Persistence of Prompt Engineering: A Statistical Snapshot

Despite conversational AI workflows clearly emerging as the more intuitive and user-preferred approach, prompt engineering continues to dominate across educational and professional landscapes. Our research across multiple domains (2020–2025) highlights this persistent disparity:

Conclusion

Prompt engineering emerged as a necessary adaptation to early AI models’ limitations, enabling pioneers and technical enthusiasts to unlock generative AI’s potential. Its widespread adoption from 2020–2025, however, has resulted in an unintended misalignment. As AI systems rapidly evolve, now understanding human intentions through natural dialogue, the detailed, programming-like prompts of the past have become redundant.

To achieve mainstream AI adoption, the industry must abandon the legacy focus on complex prompt-crafting and fully embrace conversational AI interactions. Users across all demographics, especially non-technical audiences, now expect seamless, intuitive communication with AI assistants. The rapid growth of conversational AI tools, exemplified by ChatGPT’s record-breaking user adoption, underscores this shift. Companies and practitioners who recognize this reality and prioritize conversational interfaces will significantly reduce cognitive friction, enhance user satisfaction, and accelerate widespread adoption.

Prompt engineering should be recognized as a transitional competence—a historical step toward a more inclusive AI future. The primary focus moving forward must be on intuitive, human-centered AI design, meeting users precisely where they are, without the barrier of specialized prompt knowledge. This transition from engineering bias to human-centric interaction is essential for unlocking AI’s full societal and economic potential.

Bibliography

- Coursera – Prompt Engineering Specialization (2023). Vanderbilt University, Instructor: Dr. Jules White. Link: Coursera Prompt Engineering Specialization.
- LinkedIn Learning – "Develop Your Prompt Engineering Skills" Path (2023–2024). Link: LinkedIn Learning Prompt Engineering Path.
- IBM Skills Network – "Prompt Engineering for Everyone" (2023). Cognitive Class, IBM. Link: IBM Cognitive Class – Prompt Engineering for Everyone.
- Class Central – Prompt Engineering Courses Index (2025). Link: Class Central – Prompt Engineering.
- Google – "Prompt Engineering for Generative AI" Documentation (2023). Google Developers Guide. Link: Google Developers – Prompt Engineering.
- World Economic Forum – "The Rise of the 'Prompt Engineer' and Why It Matters" (May 2023). Kate Whiting, featuring Albert Phelps, Accenture. Link: WEF – Prompt Engineer Rise.
- Medium (Lexiconia) – "The Decline of Hype: Analyzing the Slowing Trend of Prompt Engineering" (Apr 2025). Mohamad Mahmood. Link: Medium – Prompt Engineering Decline.
- Medium (Towards Dev) – "You Don’t Need to Learn Prompts to Use AI" (Apr 2025). Mohamad Mahmood. Link: Medium – No Prompt Needed.
- Medium (UX Collective) – "The Perennial Art of Conversation Design amid the LLM Era" (Sep 2024). Rômulo Luzia. Link: UX Collective – Conversation Design.
- Tim Wappat’s Blog – "Technology Adoption Lifecycle & 'Crossing the Chasm' with AI in 2024" (Nov 2023). Link: Tim Wappat – Crossing the Chasm.
- Richard Hutchings (CTO) – "Maximising AI Adoption: Overcoming Barriers to User Engagement" (Apr 2025). LinkedIn article. Link: LinkedIn – Maximising AI Adoption.
- Reuters – "ChatGPT Sets Record for Fastest-Growing User Base" (Feb 2023). Link: Reuters – ChatGPT Record Growth.
- Reuters – "ChatGPT Will ‘See, Hear and Speak’ in Major Update" (Sep 2023). Link: Reuters – ChatGPT Multimodal Update.
- IBM Tech Blog – "What is Chain of Thought (CoT)?" (Aug 2024). Link: IBM – Chain of Thought.
- Jean-Charles Dervieux – "Crossing the Chasm: Turning Disruptive Innovation into Mainstream Success" (Aug 2024). Link: LinkedIn – Crossing the Chasm.
- Technology Adoption Lifecycle Chart (Craig Chelius, Wikimedia Commons). Link: Wikimedia Commons – Technology Adoption Lifecycle.