The Hidden Bias in AI Training: Why Rigid Prompting Fails Most Humans

There's an unspoken assumption embedded deep in the world of AI training: that what feels intuitive and natural to engineers should feel intuitive and natural to everyone else. This assumption creates a fundamental disconnect that slows AI adoption and leaves many would-be users frustrated and alienated.

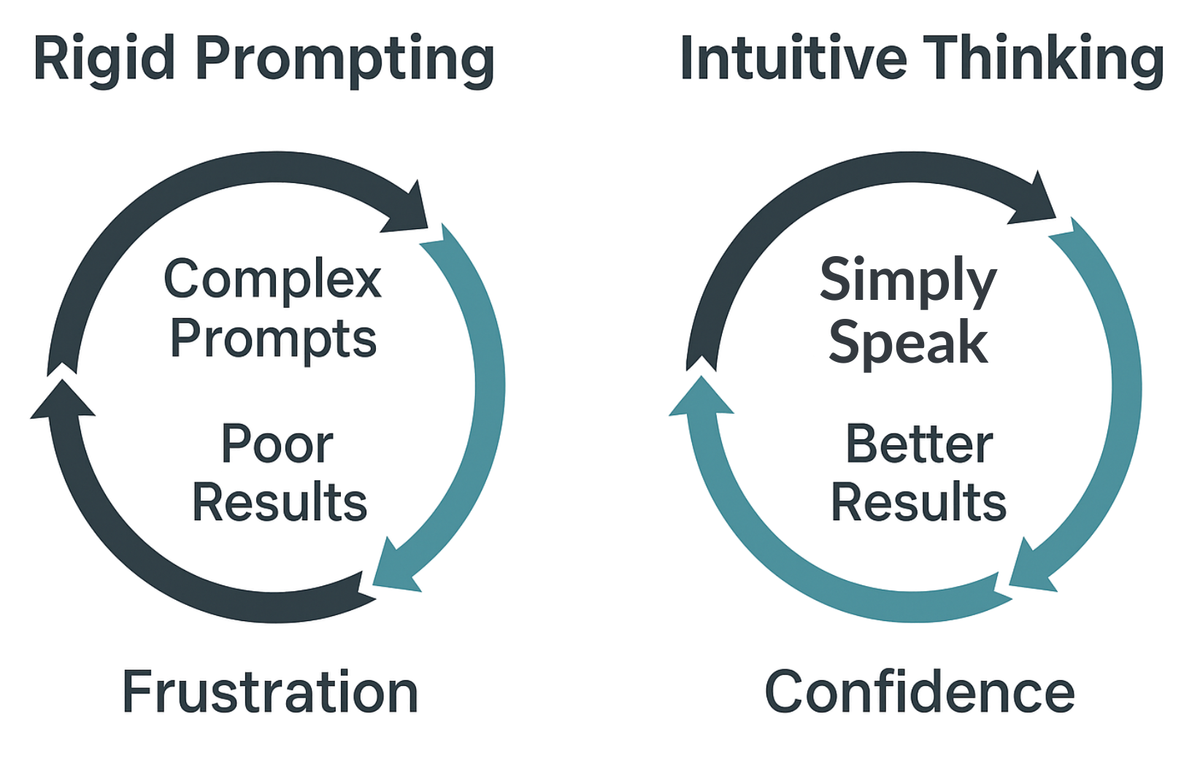

Today's dominant method of AI instruction, rigid and precise "prompt engineering," feels natural to the people who created it: engineers and technical experts. They tend to thrive in structured, highly detailed communication frameworks that mirror their own cognitive and professional habits. For most other people, however, and particularly for intuitive thinkers, creative professionals, senior leaders, and non-technical users, this approach is anything but natural.

When AI experts teach that precise, structured prompts are the "correct" way to communicate with AI, they unwittingly project their own biases onto others. This "engineering bias" creates unnecessary barriers to entry and can actively undermine users' confidence. It pushes people into artificial modes of communication, distorts their natural thought processes, and limits their ability to use AI effectively.

Ironically, modern AI assistants like ChatGPT are explicitly designed to support natural, conversational interaction. They tend to work best when users express themselves openly and intuitively, without complex scripting or elaborate engineering. Natural expression, rich context, and genuine, human-like dialogue are exactly what these systems are built to handle.

The result of engineering-biased training is a profound mismatch. AI novices who follow rigid prompting frameworks often get results that lack nuance, depth, and relevance. They become frustrated and blame their own abilities, rather than recognizing that they were taught an approach fundamentally misaligned with their natural strengths.

In reality, intuitive interaction—speaking naturally and authentically—is precisely how these AI models were meant to be used. By encouraging people to engage naturally, we unlock the true potential of AI: deep understanding, nuanced responses, and meaningful collaboration.

The solution, therefore, is simple but critical: AI training must shift away from rigid, engineering-centric prompting and toward intuitive, conversational engagement. That means training programs and educational content designed by communication experts, cognitive psychologists, and human-centered AI trainers, not just by engineers.

When AI interaction aligns with natural human communication, users feel empowered, confident, and eager to explore AI's full potential. This shift not only benefits individuals but accelerates AI adoption across organizations and societies.

Let's reject the hidden bias embedded in rigid prompting. Embrace intuitive thinking, speak naturally, and unlock the extraordinary potential that AI has to offer.