Humans: Redundant or Relevant?

What Kind of Future Are We Building?

AI is transforming the workplace, but is it doing so to amplify human potential or to eliminate the need for humans altogether?

In the rush to scale artificial intelligence, many organizations are prioritizing automation over augmentation. As a result, “AI-first” is becoming synonymous with “human-last.” And while this shift may promise speed and cost-efficiency, it poses a long-term risk to innovation, resilience, and inclusion.

This pervasive trend is forcing us to get serious about answering the question: What kind of future are we building with AI — one where humans are made irrelevant, or one where human potential is amplified?

Human Redundancy vs. Human Augmentation

Human redundancy is the design of systems with the intention of reducing or eliminating the need for human involvement. It’s driven by the belief that efficiency comes from minimizing human input.

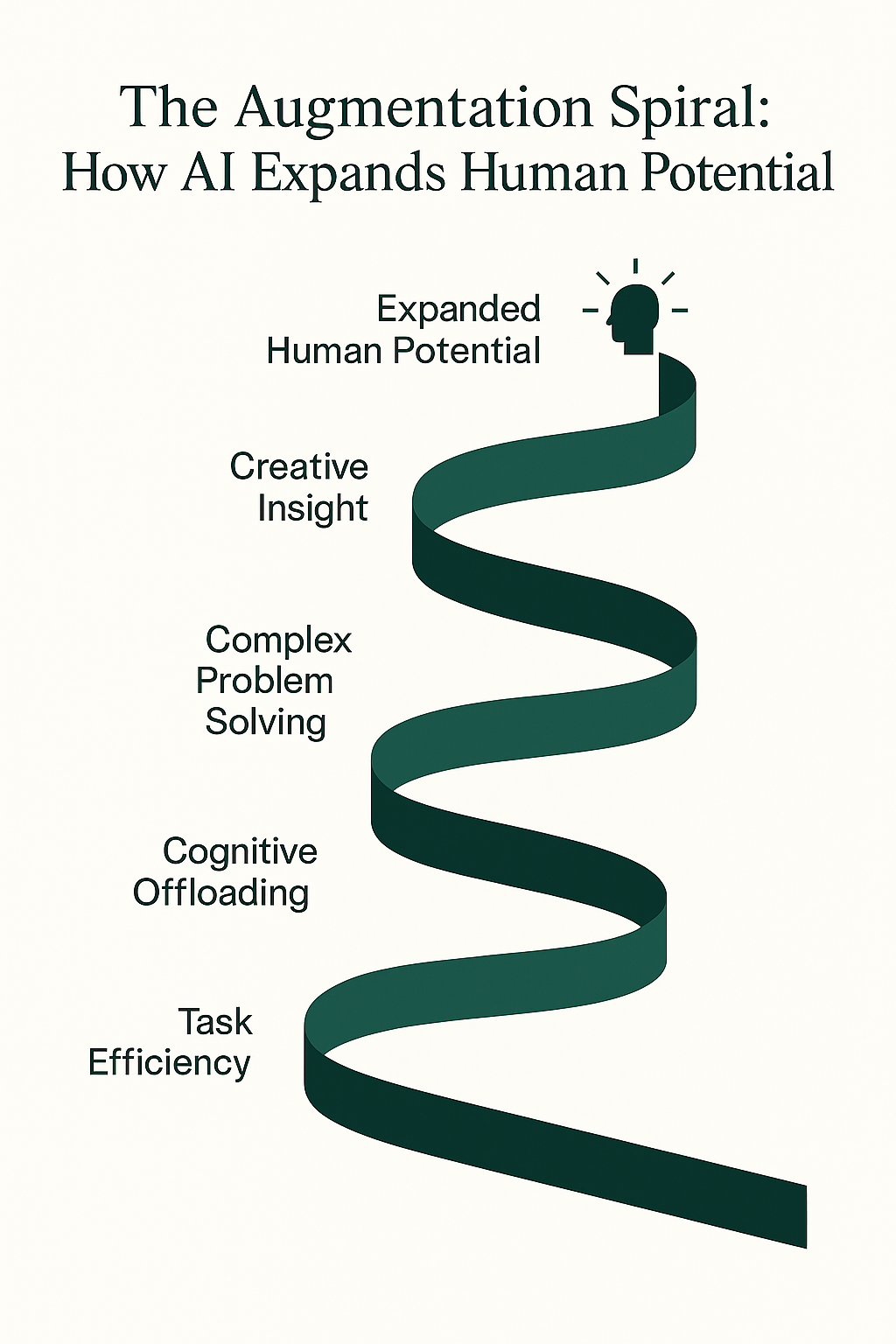

Human augmentation is the intentional design of tools, workflows, and systems that expand human capacity — making people more effective, more creative, and more supported in their decision-making.

The difference is not just philosophical. It impacts product design, team structure, training investments, and the emotional relationship people have with the tools they use.

3 Forces Driving Redundancy Over Augmentation

#1 Investor Incentives Are Aligned With Headcount Reduction

Most AI funding models are built on the promise of lower costs through leaner teams. That means startups, and even enterprise teams, often feel pressured to deliver tools that “replace” roles rather than support them. This creates a wave of tools optimized for eliminating people, not elevating them.

#2 Design Choices Reflect a “Userless” Future

Many tools assume a single user type: technically fluent, fast-moving, and comfortable with logic-first interaction. As a result, emotional nuance, strategic scaffolding, and collaborative intelligence are left out of the product roadmap. We’re building tools for machines, not humans.

#3 Workforce Displacement Becomes a Feature, Not a Flaw

Instead of seeing layoffs or role collapse as a red flag, the automation-first mindset sees them as a sign of success. In this model, fewer humans equals higher efficiency, even if it means eroding morale, losing domain expertise, or widening existing skill gaps.

Augmentation Needs to Become the Focus

Shifting toward augmentation isn’t just ethical — it’s economically smart.

- It increases resilience. Teams that integrate AI to support decision-making, strategy, and creative work are more adaptable in fast-changing environments. They respond better to disruption because they’re not leaning entirely on automation.

- It leads to better outcomes. When humans are in the loop, tools are calibrated with real-world nuance and empathy. This is especially critical in areas like healthcare, education, customer support, and leadership.

- It protects institutional knowledge. Augmenting experts — instead of replacing them — ensures continuity, depth, and long-term capability building.

Augmentation in Action: Real-World Use Cases

Here’s what augmentation looks like in practice:

- Healthcare: AI helps doctors surface diagnostic options faster, but decisions still rely on human expertise, bedside manner, and ethical judgment.

- Education: AI tools personalize student learning paths, while teachers spend more time on engagement, feedback, and mentorship.

- Marketing: Strategists use AI to generate content variations or analyze trends, freeing them to focus on messaging and brand development.

- Facilitation: Workshop leaders use AI to design session agendas, generate brainstorm prompts, and capture insights — so they can stay present with the group.

- Coaching: Executive coaches use AI to synthesize notes, highlight patterns, and suggest growth frameworks — enhancing client breakthroughs, not replacing them.

This is not “AI doing your job for you.” It’s AI helping you do your job better.

What Needs to Change

To move from redundancy to relevance, we need shifts at every level:

- Design: Tools must be built with human input, strategic collaboration, and emotional nuance in mind.

- Policy: Regulations should support reskilling, ethical AI use, and transparency around automation.

- Training: Education must prepare professionals to work with AI as a partner, not fear it as a threat.

The Bottom Line

The future of AI isn’t just about what tools can do. It’s about what kind of future we choose to build with them.

Will we design systems that erase humans from the process — or systems that help people rise into their most strategic, creative, and meaningful contributions?

This is the fork in the road. And, for now, the choice is still ours.